How Mean Opinion Score Ratings Improve Text-To-Speech Models

Increase the Confidence Level of Your Text-To-Speech Models With Higher Mean Opinion Score Ratings

Mean Opinion Score (MOS) is a widely recognised method for evaluating the speech quality of a text-to-speech (TTS) model. It is a subjective measure that captures how human listeners perceive the model's output. The goal of a TTS model is to give customers and end users a natural, seamless experience, making it easier for them to access information and communicate with digital devices.

Why TTS Quality is Important

Text-to-speech (TTS) technology matters today because it enables computers and other digital devices to communicate with people in a natural and efficient way. By converting written text into spoken language, it makes digital content more accessible. It also helps automate tasks in call centres and customer service functions.

TTS technology is advancing rapidly and is already transforming the way we interact with digital devices. We already use it to access information, to communicate and even to play games online. For this reason, knowing the confidence level of your TTS model is key to improving the user experience.

Why MOS is Used to Rate the Confidence Level of a TTS Model

Developers use MOS (Mean Opinion Score) to rate the confidence level of their Text-to-Speech (TTS) systems as it provides a way to measure the perceived quality of artificial speech.

MOS ratings are used to evaluate the accuracy and naturalness of artificial speech and allow developers to better understand how their TTS system is performing from the perspective of human listeners. This can help them identify issues that may be difficult to detect through automated testing and improve the overall quality of the system.

How the MOS is Calculated

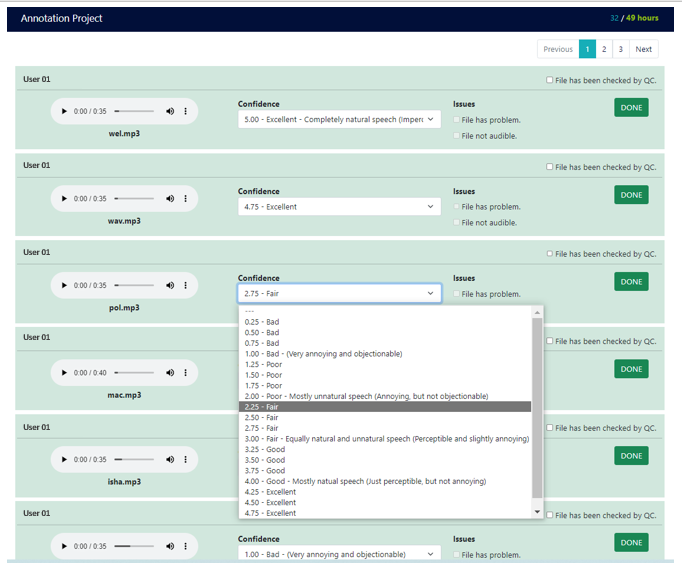

Human evaluation using mean opinion score ratings remains the most effective way to judge the success of a text-to-speech model. MOS uses a scale of 1 to 5: a score of 1, the lowest, means the audio sounds unnatural, robotic and/or annoying, and a score of 5, the highest, means it sounds like completely natural speech that could pass for a real person!

For example, suppose a developer needs to evaluate the performance of their TTS model before going live. The preferred method is to engage a group of participants to rate the speech generated by the model on the MOS scale from 1 to 5, where 1 is bad and 5 is excellent. The MOS is then calculated as the arithmetic mean of all the ratings the participants provide. A score of 4.3 to 4.5 is considered excellent quality.
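Because the MOS is simply the average of the individual ratings, the calculation is short. The Python sketch below computes it and, as an optional extra that is common when reporting MOS results, an approximate 95% confidence interval showing how much the score might shift with a different panel of listeners. The function names and sample ratings are hypothetical, and the interval uses a normal approximation rather than any method prescribed by a particular standard.

```python
import math
import statistics

def mean_opinion_score(ratings: list[int]) -> float:
    """Return the MOS: the arithmetic mean of individual 1-5 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for r in ratings:
        if r not in (1, 2, 3, 4, 5):
            raise ValueError(f"rating {r!r} is outside the 1-5 scale")
    return sum(ratings) / len(ratings)

def mos_confidence_interval(ratings: list[int], z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval for the MOS.

    Uses the normal approximation (z = 1.96). For small panels, a Student's t
    critical value would give a wider, more cautious interval.
    """
    if len(ratings) < 2:
        raise ValueError("at least two ratings are needed to estimate spread")
    mos = mean_opinion_score(ratings)
    # Standard error of the mean: sample standard deviation / sqrt(n)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos - half_width, mos + half_width

# Eight hypothetical listener ratings for one synthesised recording
ratings = [5, 4, 4, 5, 3, 4, 5, 4]
print(mean_opinion_score(ratings))  # 4.25
low, high = mos_confidence_interval(ratings)
print(f"95% CI: {low:.2f} to {high:.2f}")  # 95% CI: 3.76 to 4.74
```

A narrow interval suggests the panel broadly agreed; a wide one suggests more listeners are needed before trusting small differences between models.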

A high MOS indicates that participants found the speech natural and close to human speech. A low score indicates that the output needs improvement to sound more natural and human-like.

Model Veracity

Testing the speech quality of your TTS model before launching your product is a worthwhile step towards better customer satisfaction and user experience. To do this, engage a partner with access to a diverse group of participants who represent your ideal customers and end users. A diverse panel helps keep your MOS evaluation unbiased and captures as many perspectives on the model's performance as possible across demographics.

Partner Wisely – Way With Words

Way With Words has a custom-built platform that can host unlimited voice samples and audio files and conduct MOS evaluations of any size online. Because our contractor base is large, participants can also be matched to target regions and selected demographics worldwide.

Success Story: Text-To-Speech Model Evaluation for a Developer Client

The Challenge:

Evaluate a large volume of synthesised (artificial) speech for each TTS model and rate the confidence level of each recording from a human perspective.

Rating the Confidence Level of Each Recording

| Score | Rating | How natural / human | How robotic |
|---|---|---|---|
| 5 | Excellent | Completely natural speech | Imperceptible |
| 4 | Good | Mostly natural speech | Just perceptible but not annoying |
| 3 | Fair | Equally natural and unnatural | Perceptible and slightly annoying |
| 2 | Poor | Mostly unnatural speech | Annoying, but not objectionable |
| 1 | Bad | Completely unnatural speech | Very annoying and objectionable |
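A single MOS can hide very different listener reactions: four "Fair" ratings and a split of two "Excellent" and two "Bad" both average 3.0. The hypothetical Python helper below uses the five rating labels from the scale above to count how many listeners chose each point, which can be reported alongside the MOS. The function name and sample ratings are illustrative only.

```python
from collections import Counter

# Labels from the five-point rating scale above
RATING_LABELS = {5: "Excellent", 4: "Good", 3: "Fair", 2: "Poor", 1: "Bad"}

def rating_distribution(ratings: list[int]) -> dict[str, int]:
    """Count how many listeners chose each point on the scale, best first."""
    counts = Counter(ratings)
    invalid = set(counts) - set(RATING_LABELS)
    if invalid:
        raise ValueError(f"ratings outside the 1-5 scale: {sorted(invalid)}")
    # Counter returns 0 for scores nobody chose
    return {RATING_LABELS[score]: counts[score] for score in sorted(RATING_LABELS, reverse=True)}

# Two hypothetical recordings with the same MOS (3.0) but different listener reactions
print(rating_distribution([3, 3, 3, 3]))
# {'Excellent': 0, 'Good': 0, 'Fair': 4, 'Poor': 0, 'Bad': 0}
print(rating_distribution([5, 1, 5, 1]))
# {'Excellent': 2, 'Good': 0, 'Fair': 0, 'Poor': 0, 'Bad': 2}
```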

The Solution:

Way With Words assembled a team to the client's specification to evaluate all of the TTS models. After annotator and inter-annotator agreement checks, the mean opinion score for each model was finalised and exported from our data annotation workflow in CSV format for delivery to the client.
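Aggregating individual ratings into one MOS per model and exporting the result as CSV might look something like the Python sketch below. This is not Way With Words' actual workflow: the row layout (model, recording, rater, score), the function names and the CSV columns are hypothetical, and the sketch assumes the agreement checks have already been done upstream.

```python
import csv
import io
from collections import defaultdict

def mos_per_model(rows):
    """Pool every rating for each model into (mos, number_of_ratings).

    `rows` is an iterable of (model_id, recording_id, rater_id, score) tuples,
    a hypothetical layout for an annotation export.
    """
    scores = defaultdict(list)
    for model_id, _recording_id, _rater_id, score in rows:
        if score not in (1, 2, 3, 4, 5):
            raise ValueError(f"rating {score!r} is outside the 1-5 scale")
        scores[model_id].append(score)
    return {model: (sum(s) / len(s), len(s)) for model, s in sorted(scores.items())}

def to_csv(per_model) -> str:
    """Render the per-model results as CSV text, with MOS to two decimals."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["model_id", "mos", "num_ratings"])
    for model_id, (mos, count) in per_model.items():
        writer.writerow([model_id, f"{mos:.2f}", count])
    return buffer.getvalue()

rows = [
    ("model_a", "rec_01", "rater_1", 5),
    ("model_a", "rec_01", "rater_2", 4),
    ("model_a", "rec_02", "rater_1", 4),
    ("model_b", "rec_01", "rater_1", 3),
    ("model_b", "rec_01", "rater_2", 4),
]
print(to_csv(mos_per_model(rows)), end="")
# model_id,mos,num_ratings
# model_a,4.33,3
# model_b,3.50,2
```

Pooling every rating gives recordings with more ratings more weight. If the number of raters varies between recordings, averaging per recording first and then per model is a reasonable alternative. To write a file instead of a string, open it with `newline=""` and pass the file object to `csv.writer`.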

Final outcome:

The client was able to optimise their TTS model and improve its overall performance. This enabled them to launch a product with a MOS of 4.3, rivalling some of the big players at a fraction of the cost.

Ready to take your data annotation projects to the next level? With a proven track record of success and the ability to handle large-scale projects, we’re confident that we can help you achieve your goals. Contact us today to get started!
